Multimodality represents the most effective and comprehensive form of information representation in the real world. Humans naturally integrate various data types, such as text, audio, images, videos, touch, depth, 3D, animations, and biometrics, to perceive accurately and make sound decisions. As a result, our digital world is inherently multimodal.

Multimodal data analytics often outperforms single-modal approaches in addressing complex real-world challenges. Additionally, the fusion of multimodal sensor data is a growing area of interest, particularly for industries like automotive, drone vision, surveillance, and robotics, where automation relies on integrating diverse control signals from multiple sources.

The rapid advancement of Big Data technology and its transformative applications across various fields make multimodal Artificial Intelligence (AI) for Big Data a highly relevant and timely topic. This workshop aims to generate momentum around this topic of growing interest and to encourage interdisciplinary interaction and collaboration among the Natural Language Processing (NLP), computer vision, audio processing, machine learning, multimedia, robotics, Human-Computer Interaction (HCI), social computing, cybersecurity, cloud computing, edge computing, Internet of Things (IoT), and geospatial computing communities. It serves as a forum for active researchers and practitioners from academia and industry to share their insights and recent advancements in this promising area.

MMAI 2024 Accepted Final Papers & Program Schedule

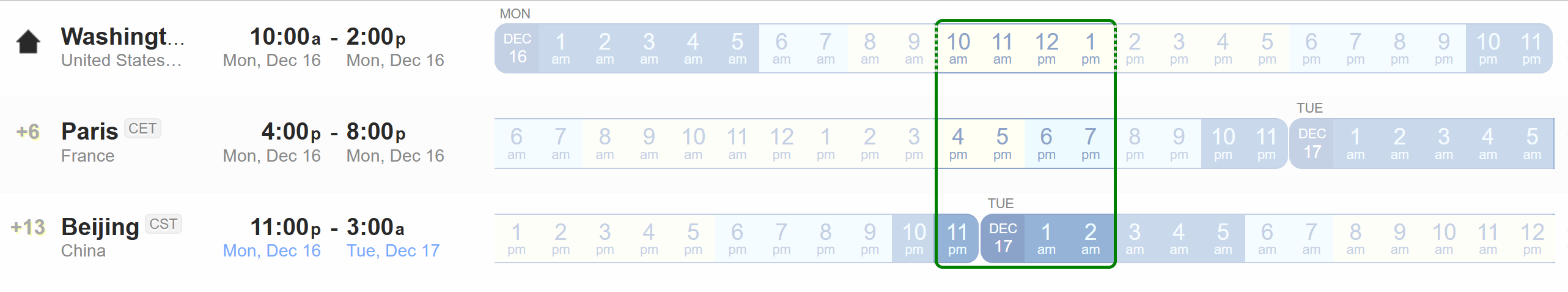

Dec. 16, 2024, Washington, DC (GMT-5), 10:15 am to 1:20 pm

Virtually: Please join IEEE Big Data Workshop - MMAI 2024 through the link shared with you via email.

Physically: Hyatt Regency Washington on Capitol Hill, Conference Room - Yosemite (2nd Floor)

| Time | Mode | Type | Pages | Paper Title | Author(s) |
|---|---|---|---|---|---|
| Opening Remarks (Washington, DC time 10:15 am) | | | | | |
| 10:15-10:25 | Online | Poster | 4 | MixMAS: A Framework for Sampling-Based Mixer Architecture Search for Multimodal Fusion and Learning | Abdelmadjid Chergui, Grigor Bezirganyan, Sana Sellami, Laure Berti-Équille, and Sébastien Fournier |
| 10:25-10:35 | Online | Poster | 6 | Automated Interpretation of Non-Destructive Evaluation Contour Maps Using Large Language Models for Bridge Condition Assessment | Viraj Darji, Callie Liao, and Duoduo Liao |
| 10:35-10:50 | Online | Full | 10 | Animating the Past: Reconstruct Trilobite via Video Generation | Xiaoran Wu, Zien Huang, and Chonghan Yu |
| 10:50-11:05 | Online | Full | 10 | Disentangled Prompt Learning for Transferable, Multimodal, Few-Shot Image Classification | John Yang, Alessandro Magnani, and Binwei Yang |
| 11:05-11:20 | In-person | Full | 10 | Adaptive Signal Analysis for Automated Subsurface Defect Detection Using Impact Echo in Concrete Slabs | Deepthi Pavurala, Duoduo Liao, and Chaithra Reddy Pasunuru |
| 11:20-11:30 | In-person | Poster | 5 | On the Effectiveness of Text and Image Embeddings in Multimodal Hate Speech Detection | Nora Lewis, Charles Casimiro Cavalcante, Zois Boukouvalas, and Roberto Corizzo |
| 11:30-11:40 | In-person | Poster | 4 | Geospatial Data and Multimodal Fact-Checking for Validating Company Data | Susanne Walter, Gabriela Alves Werb, Lisa Reichenbach, Patrick Felka, and Ece Yalcin-Roder |
| 11:40-11:50 | In-person | Poster | 4 | Random Forest-Supervised Manifold Alignment | Jake Slater Rhodes and Adam G. Rustad |
| 11:50-12:02 | In-person | Short | 9 | Dynamic Intelligence Assessment: Benchmarking LLMs on the Road to AGI with a Focus on Model Confidence | Norbert Tihanyi, Tamas Bisztray, Richard A. Dubniczky, Rebeka Toth, Bertalan Borsos, Bilel Cherif, Ridhi Jain, Lajos Muzsai, Mohamed Amine Ferrag, Ryan Marinelli, Lucas C. Cordeiro, Merouane Debbah, Vasileios Mavroeidis, and Audun Josang |
| 12:02-12:14 | In-person | Short | 8 | Event-Based Multi-Modal Fusion for Online Misinformation Detection in High-Impact Events | Javad Rajabi, Sunday Okechukwu, Ahmad Mousavi, Roberto Corizzo, Charles Cavalcante, and Zois Boukouvalas |
| 12:14-12:26 | In-person | Short | 7 | Multimodal Threat Evaluation in Simulated Wargaming Environments | Pierre Vanvolsem, Koen Boeckx, and Xavier Neyt |
| 12:26-12:38 | Online | Short | 9 | A Multimodal Fusion Framework for Bridge Defect Detection with Cross-Verification | Ravi Datta Rachuri, Duoduo Liao, Samhita Sarikonda, and Datha Vaishnavi Kondur |
| 12:38-12:50 | In-person | Short | 9 | InfoTech Assistant: A Multimodal Conversational Agent for InfoTechnology Web Portal Queries | Sai Surya Gadiraju, Duoduo Liao, Akhila Kudupudi, Santosh Kasula, and Charitha Chalasani |
| 12:50-13:00 | In-person | Poster | 4 | Multimodal Deep Learning for Online Meme Classification | Stephanie Han, Sebastian Leal-Arenas, Eftim Zdravevski, Charles Casimiro Cavalcante, Zois Boukouvalas, and Roberto Corizzo |
| 13:00-13:10 | In-person | Poster | 5 | Natural Language Querying on NoSQL Databases: Opportunities and Challenges | Wenlong Zhang, Tian Shi, and Ping Wang |
| 13:10-13:20 | In-person | Poster | 6 | Estimating the Identity of Satoshi Nakamoto using Multimodal Stylometry | Glory Adebayo and Roman Yampolskiy |
| Closing Remarks (Washington, DC time 1:20 pm) | | | | | |

*The program schedule is subject to change.